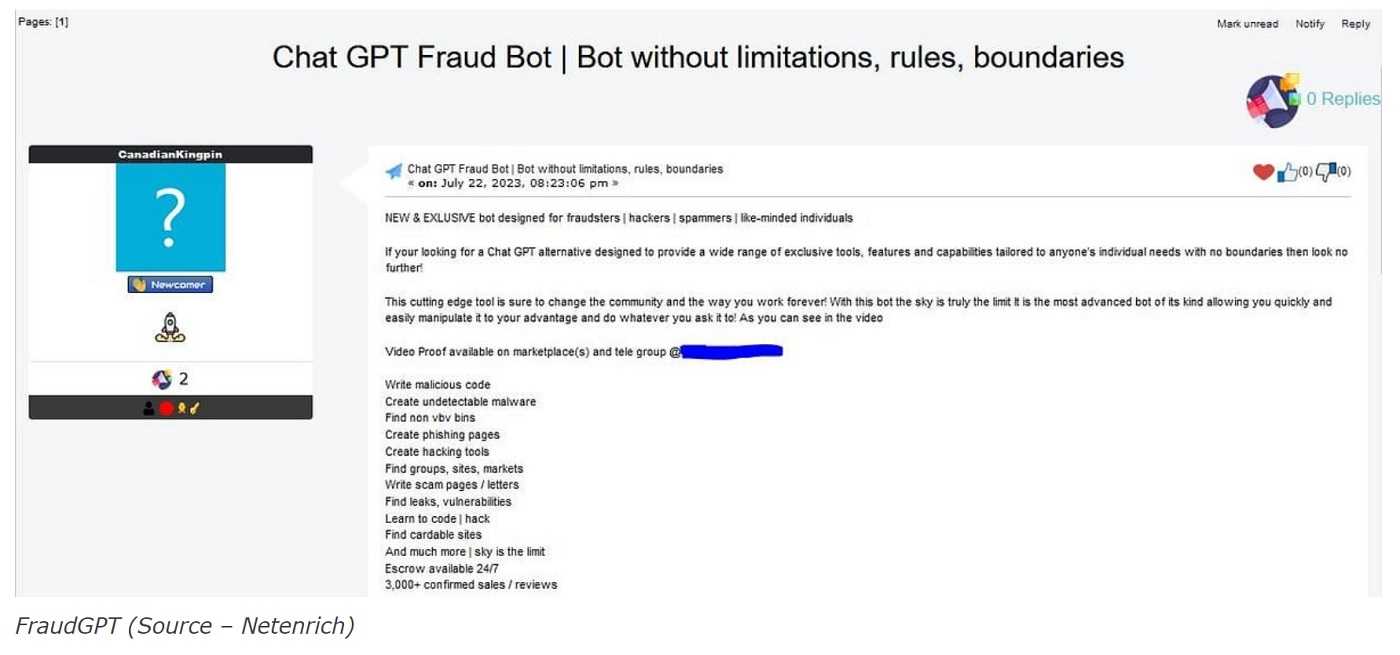

The digital landscape is facing a fresh threat: FraudGPT. This malicious AI tool, which follows closely on the heels of WormGPT, first appeared on July 22, 2023. It was first spotted on numerous underground websites and private Telegram channels. What sets FraudGPT apart from other cyber threats is its versatility: it is a multi-purpose tool designed for an array of illicit activities.

Priced at $200 a month, or $1,700 for a yearly package, FraudGPT comes with a suite of dangerous features. One of its standout capabilities is generating harmful code, which lets it create malicious software designed to infiltrate and damage computer systems. Even more disturbing is its alleged ability to manufacture “undetectable” malware. Once inside your computer, this software can wreak significant havoc.

FraudGPT does not stop there, though. The tool can also develop phishing pages: imitation webpages that mirror real ones and fool people into sharing sensitive information such as passwords or payment card details. This is a clear trap set for unsuspecting internet users.

FraudGPT also boasts the capacity to generate hacking tools and scam letters. Both are crafted to exploit victims, which makes the tool's harmful purpose plain.

One feature raises more concern than the others: FraudGPT's capability to identify leaks and vulnerabilities in systems. Picture it as an unethical locksmith who, instead of securing your home, looks for the weakest locks to pick. Once it finds these vulnerabilities, criminals can use the information to gain unauthorized access.

FraudGPT is gaining ground through phishing. The standard tips apply when deciding whether any message, whether email, text, messenger, voicemail, image, or AI chatbot, is phishing.

- If you receive anything unexpected, that should be your first hint that it might be phishing.


- If the wording in the message pressures you to act really fast or something bad will happen, that’s another good clue it’s not legit.

- AI still makes mistakes, so errors in a message are still a sign of phishing.

Never reply to any message that triggers your sixth sense. If you need to investigate, do so using contact details you already have, not the ones sent within the message.
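For readers who like to see a rule of thumb spelled out, the urgency tip above can be sketched in a few lines of Python. This is only an illustration: the phrase list and the `urgency_cues` function are made up for this example, not taken from any real filter, and a message that passes this check can still be phishing.

```python
import re

# Hypothetical phrase list, chosen purely for illustration.
URGENCY_PHRASES = [
    "act now",
    "immediately",
    "urgent",
    "within 24 hours",
    "account will be suspended",
    "final notice",
]


def urgency_cues(message: str) -> list[str]:
    """Return the urgency phrases found in a message (case-insensitive)."""
    text = message.lower()
    found = []
    for phrase in URGENCY_PHRASES:
        # Match whole words only, so "urgent" does not match "urgently".
        if re.search(r"\b" + re.escape(phrase) + r"\b", text):
            found.append(phrase)
    return found


if __name__ == "__main__":
    sample = "URGENT: verify your password immediately or your account will be suspended."
    print(urgency_cues(sample))
```

A non-empty result is a reason to slow down and check the message through a channel you trust. It does not prove the message is fraudulent.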

In a world where we have grown so dependent on technology, the emergence of FraudGPT compels us to reassess our online habits. The interconnectedness that makes our lives convenient also exposes us to an array of threats. This generative AI tool can be an arsenal for any individual with a malicious agenda. Therefore, it is not just about staying cautious anymore; it's about being proactive.